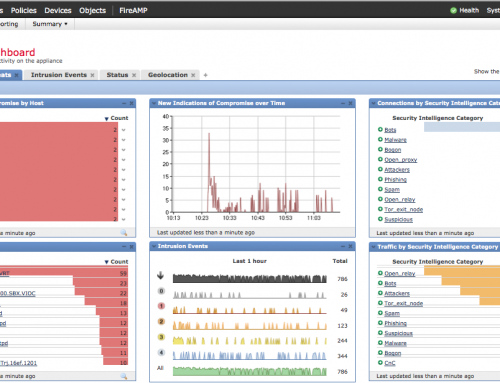

I ran into the “Database Integrity Check Failed on FirePOWER” issue on the Management Center while trying to back up the Management Center or upgrade it to a newer version. The system runs a DB integrity check before it performs any upgrade or even a backup task. If the database integrity check fails, it results in a fatal error like the one shown below. The Management Center was formerly called FireSIGHT Defense Center; they are essentially the same product. I have put together my experience and what I did to resolve the issue. I hope it helps you as well.

Update Installation Failed : [ 8%] Fatal error: Error running script 000_start/110_DB_integrity_check.sh

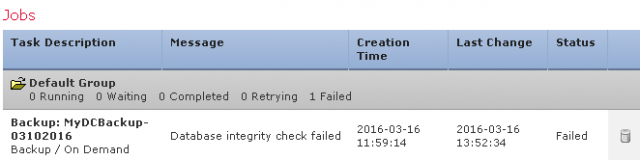

Under System > Monitoring > Task Status

It is a very common issue when you have a lot of events coming in and being processed by the FirePOWER Management Center. For each event that comes in, an entry is created in a SQL database table. Over time the system creates millions of entries. Any file integrity error will cause the DB check to fail.

The good news is that most of the time the corruption affects the Event tables. You can attempt to repair them by running the SQL DB repair utilities, and most likely they can be repaired. In the worst case, if a table can’t be repaired, you can simply delete the corrupted DB table if that piece of historical Event data isn’t very important to you. I will walk you through the process in a bit.

Database integrity check failed on FirePOWER Management Center

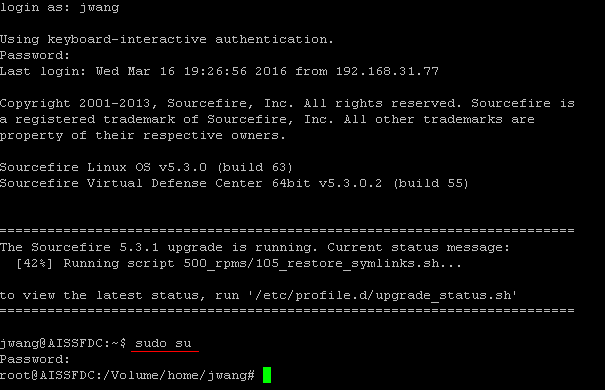

SSH to the FirePOWER Management Center and become Super User

Review Log Files to Find Out Where It Failed

In this example I was trying to upgrade my Management Center from version 5.3.0.2 to 5.3.1. I uploaded the file to the Management Center and tried to run the upgrade. I got the error message below. If you are looking for upgrade instructions, I have a separate guide for that.

Update Installation Failed : [ 8%] Fatal error: Error running script 000_start/110_DB_integrity_check.sh

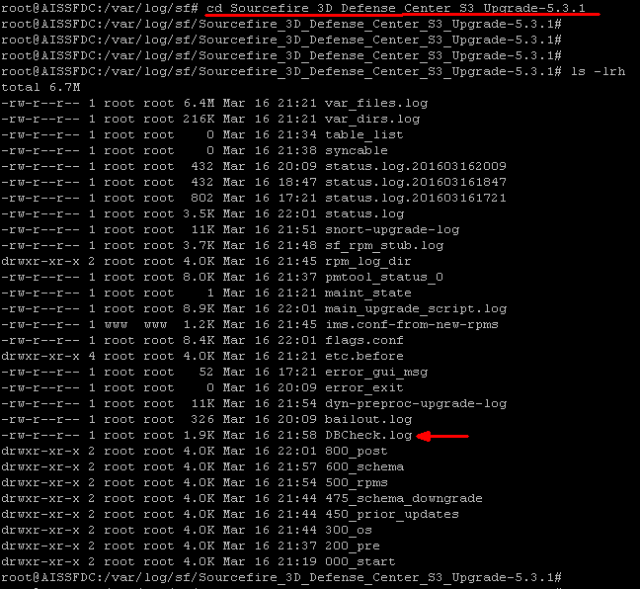

The FirePOWER Management Center will generate a folder in /var/log/sf/ with the same name as the upgrade or patch you were trying to run. This folder may not be generated if the upgrade process failed at a very early stage. In that case, please skip to the next option. In my case the folder was created here.

/var/log/sf/Sourcefire_3D_Defense_Center_S3_Upgrade-5.3.1

We are looking for DBCheck.log.

Open the DBCheck.log file with cat ./DBCheck.log. You are looking for the [FATAL] lines; ignore the [WARNING] ones. Here is the output of my DBCheck.log.

OUT: [160316 18:47:43] FAILED 000_start/110_DB_integrity_check.sh
OUT: [160316 18:47:43] ====================================
OUT: [160316 18:47:43] tail -n 10 /var/log/sf/Sourcefire_3D_Defense_Center_S3_Upgrade-5.3.1/000_start/110_DB_integrity_check.sh.log
OUT:
OUT: rna_flow_stats_1458043200 OK; no repair required
OUT: Processing table rna_flow_stats_1458044400.
OUT: Checking for index file .....found!
OUT: Running mysqlcheck on rna_flow_stats_1458044400
OUT: rna_flow_stats_1458044400 OK; no repair required
OUT: [Wed Mar 16 18:45:47 2016][FATAL] [table error] table [rna_flow_stats] loadTableInfoFromDB(): 'SHOW CREATE TABLE rna_flow_stats' Failed, DBD::mysql::st execute failed: Can't find file: 'rna_flow_stats_1455819600' (errno: 2)
OUT: [Wed Mar 16 18:45:47 2016][FATAL] [missing table] rna_flow_stats
OUT: After Checking DB, Warnings: 14, Fatal Errors: 4
OUT: Database Integriy check produced errors
OUT: Fatal error: ERROR: Database integrity check failed
OUT:
OUT: [160316 18:47:44] Fatal error: Error running script 000_start/110_DB_integrity_check.sh
OUT: [160316 18:47:44] Exiting.
OUT: removed `/tmp/upgrade.lock/UUID'

The output not only confirms that the database integrity check failed on the FirePOWER Management Center, it also tells us where it failed. It looks like we had an issue with the table [rna_flow_stats].

If you do not have the log folder and files yet because your upgrade process failed at a very early stage, you can review the real-time logs while you attempt the upgrade again.
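If the log is long, a small filter can pull out just the fatal entries and the table names they mention. This is only a sketch that assumes your log lines look like the ones above; the function names fatal_lines and fatal_tables are my own and are not part of the FirePOWER tooling.

```shell
# Hypothetical helpers (not shipped with the Management Center).

# Print only the [FATAL] lines from a log file.
fatal_lines() {
    grep -F '[FATAL]' "$1"
}

# Print the unique table names found in "[missing table] NAME" and
# "table [NAME]" entries among the FATAL lines.
fatal_tables() {
    fatal_lines "$1" \
        | sed -n -e 's/.*\[missing table\] \([A-Za-z0-9_]*\).*/\1/p' \
                 -e 's/.*table \[\([A-Za-z0-9_]*\)\].*/\1/p' \
        | sort -u
}

# Usage (from inside the upgrade log folder):
#   fatal_tables ./DBCheck.log
```

Other kinds of FATAL messages may be worded differently, so it is still worth reading the output of fatal_lines yourself.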

See real-time update logs:

tail -f /var/log/sf/updates.status

You will see everything the system is trying to do while running the upgrade script. It also includes the outputs as seen in DBCheck.log. Focus on [FATAL] and [FAILED] keywords.
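The live log scrolls quickly, so it can help to filter it as it streams. The sketch below assumes GNU grep is available on the appliance; only_failures is a name I made up for illustration.

```shell
# Hypothetical helper: keep only lines that mention FATAL or FAILED.
# --line-buffered makes grep flush each match immediately, which matters
# when its input is a live `tail -f` stream.
only_failures() {
    grep --line-buffered -E 'FATAL|FAILED'
}

# Usage (on the Management Center):
#   tail -f /var/log/sf/updates.status | only_failures
```

The match is case-sensitive, so lines such as "Fatal error:" are not shown. Add -i to the grep call if you want those as well.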

Repair Database Integrity

Now that we have pinpointed which database table was giving trouble, we can try to fix the issue. The FirePOWER Management Center comes with a few handy scripts for exactly this purpose.

Disable events being transferred

It is always a good idea to temporarily disable event transfer between the sensors and the Management Center while we work on the database. You don’t want further DB corruption. Then use the process manager to check that all critical FirePOWER services are running in the background.

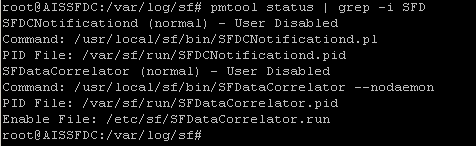

pmtool disablebyid SFDataCorrelator

pmtool status | grep -i SFD

You should see output similar to this.

Run Database repair script

Here you can run the “repair_table.pl” script against the table that the “DBCheck.pl” script flagged. Replace the table name rna_flow_stats with the name(s) of the table(s) that failed the check.

repair_table.pl -farms rna_flow_stats

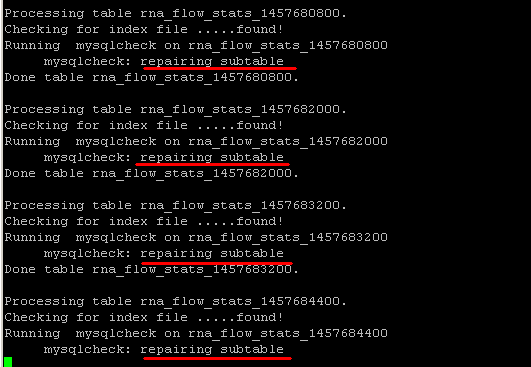

The repair script will go through the entire table and its sub-tables and attempt to fix any issues it finds.

Chances are that running the repair_table.pl script will fix all the issues. If it fails to repair all the DB integrity issues, your last option is to drop the table completely. If it was a table for an old event, you may not need to keep it anyway.
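If more than one table failed the check, a short loop saves some typing. The wrapper below is a hypothetical convenience of mine, not something that ships with the Management Center. It simply calls the same repair_table.pl -farms command once per table, and the REPAIR_CMD variable is an assumption I added so you can do a dry run first.

```shell
# Hypothetical wrapper: run the repair script once per table name.
# REPAIR_CMD defaults to the command used in this post; set it to "echo"
# for a dry run that only prints the arguments that would be passed.
repair_tables() {
    local cmd="${REPAIR_CMD:-repair_table.pl}"
    local table
    for table in "$@"; do
        echo "== repairing ${table}"
        "$cmd" -farms "$table" || echo "!! repair of ${table} reported an error" >&2
    done
}

# Usage (table names are examples; use the ones from your own log):
#   REPAIR_CMD=echo repair_tables rna_flow_stats   # dry run
#   repair_tables rna_flow_stats                   # real run
```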

mysql -padmin sfsnort -e "DROP TABLE rna_flow_stats_1455819600"
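Before dropping a sub-table it is worth checking how old it is. The numeric suffix on names like rna_flow_stats_1455819600 looks like a Unix epoch timestamp. That is my assumption, not something I have seen documented. Under that assumption, the sketch below decodes it; table_time is a made-up name and the -d @SECONDS syntax needs GNU date.

```shell
# Hypothetical helper: print the UTC time encoded in a table-name suffix,
# ASSUMING the trailing digits are a Unix epoch timestamp.
# Requires GNU date (for the -d @SECONDS syntax).
table_time() {
    local ts="${1##*_}"          # keep everything after the last underscore
    case "$ts" in
        ''|*[!0-9]*) echo "no numeric suffix in: $1" >&2; return 1 ;;
    esac
    date -u -d "@${ts}" '+%Y-%m-%d %H:%M:%S UTC'
}

# Usage:
#   table_time rna_flow_stats_1455819600
```

If the decoded date is well in the past and you no longer need those events, dropping the table is less of a loss.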

Run DBCheck.pl script again

Once the problematic database table(s) have been repaired, you may run the DBCheck.pl script again to make sure there are no further issues.
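To confirm the re-check came back clean without reading the whole output, you can look at the summary line. The sketch below assumes the summary has the same format as in my log ("After Checking DB, Warnings: N, Fatal Errors: M") and that you have the check output in a file, such as DBCheck.log; dbcheck_clean is a hypothetical helper name.

```shell
# Hypothetical helper: read saved DBCheck output and succeed only when the
# last summary line reports zero fatal errors. ASSUMES the summary format
# "After Checking DB, Warnings: N, Fatal Errors: M" seen in the log above.
dbcheck_clean() {
    local fatal
    fatal=$(sed -n 's/.*After Checking DB, Warnings: [0-9]*, Fatal Errors: \([0-9]*\).*/\1/p' "$1" | tail -n 1)
    if [ -z "$fatal" ]; then
        echo "no summary line found in $1" >&2
        return 2
    fi
    echo "fatal errors: $fatal"
    [ "$fatal" -eq 0 ]
}

# Usage:
#   dbcheck_clean ./DBCheck.log && echo "safe to retry the upgrade"
```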

Restore events processing

Once we have finished troubleshooting and fixed the issues, remember to re-enable the event processing engine.

pmtool enablebyid SFDataCorrelator

Upgrade FirePOWER Management Center

We have now addressed the “database integrity check failed on FirePOWER” issue, so you may try the system upgrade again. You should be able to apply the upgrade successfully. If it fails for some other reason, you can go back and follow the same process to troubleshoot. You may find my other guides useful.

How to Backup and Restore FirePOWER Management Center

How to Upgrade SourceFire FirePOWER FireSIGHT Management Center

Jack,

I see your post is over 3 years old, but it’s the closest I’ve found to hinting at how to fix my problem.

How were you able to run the repair_table.pl script while the mysql server was running, and hitting the errors of not being able to read the specific table?

I’ve got a situation with my FMCv where I’m trying to repair a table that has become corrupt (table id in dictionary does not match Table ID in header of table).

mysql crashes, and doesn’t give me the chance to run the repair_table.pl script.

If I turn on innodb_force_recovery, then the repair_table.pl script errors out complaining about locks.

How do you start the mysql server without it immediately trying to do its playback of logs and making sure all of its tables are ready for operation?

Also, if the repair_table.pl script doesn’t succeed, what are the steps for starting mysql, dropping the table, and then re-adding the table you just dropped? My understanding is that dropping and re-adding a table just clears the dictionary reference of the table, and then creates a new one to make the table IDs match. Is this correct?

I have a TAC case open, but TAC is not helping at the moment… all they want to do is “reimage” and move on, which is not an option.

Mark, I’m not sure there is an easy way of getting the corrupted table fixed. Did you have a configuration backup of the FMC? If so, it is a no-brainer to just rebuild the FMCv. Sometimes reconfiguring it is faster than trying to restore from a corrupted database. I wish you good luck.

Hello. So this is very helpful. I have a similar problem:

[Fri May 27 06:14:00 2022][FATAL] [missing table] sfreport…

I’m thinking of trying your solution…