What is Cisco ACI fabric forwarding? The Cisco Application Centric Infrastructure (ACI) allows applications to define the network infrastructure. It is one of the most important developments in Software Defined Networking (SDN). The ACI architecture simplifies, optimizes, and accelerates the entire application deployment life cycle. The network services it covers include routing and switching, QoS, load balancing, and security. In this session, I will explain how Cisco ACI fabric forwarding works.

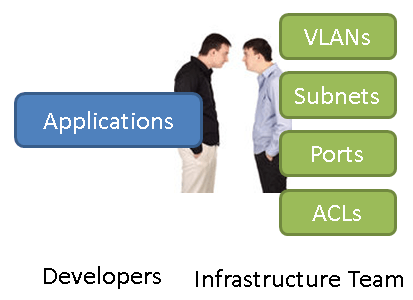

What does ACI Solve?

The Cisco ACI architecture addresses the gap between application developers and network engineers. Network connectivity, security, and policies exist to serve the applications, but the two teams think differently and communication between them is often inefficient. ACI takes a different approach: a program manages the network on the application’s behalf, which makes configuration a lot simpler. Most importantly, the network adapts to the application’s needs and changes in an automated fashion. This makes the network infrastructure much easier to manage and scale.

What is the relationship between the applications and the network? An application is not just a VM or a server. It is the collection of all the application’s end points, the Layer 2 to 7 network policies, and the relationships between the end points and the policies.

I spent some time and put together a list of Cisco Nexus switches that support ACI mode. They are in two categories, fixed switch and modular-based platform. Save some time and download the PDF for free.

Overview of ACI Fabric

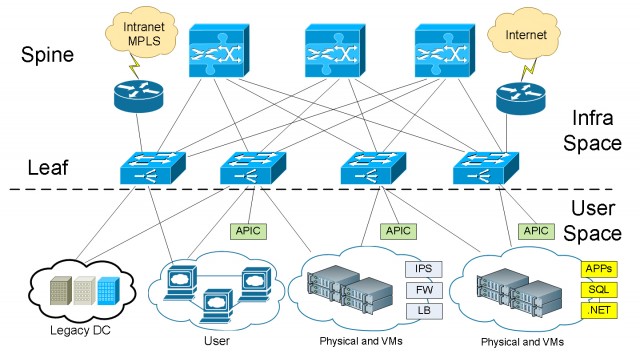

Let’s first understand the basic concepts. Cisco ACI uses a “Spine” and “Leaf” topology, also known as a Clos architecture, to deliver network traffic.

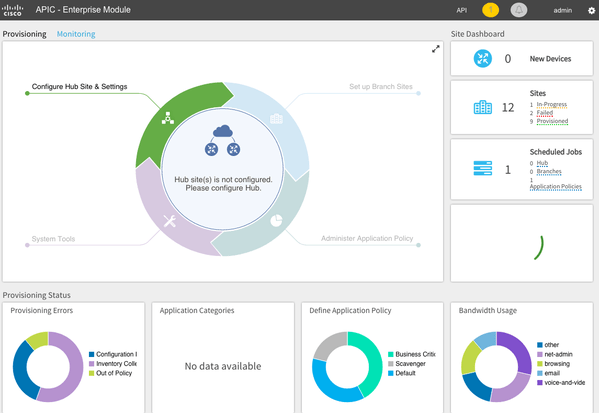

The Cisco Application Policy Infrastructure Controller (APIC) API enables applications to directly connect with a secure, shared, high-performance resource pool that includes network, compute, and storage capabilities.

The Application Policy Infrastructure Controller is the heart and brain of the ACI architecture. The APIC provides a GUI where you provision your network and manage network policies for ACI. It is very scalable and reliable. Most importantly, the APIC exposes a single API for centralized, comprehensive network policy management. This means you don’t have to use the APIC GUI to manage ACI. You can run or develop your own third-party tools to manage ACI directly, or use tools such as OpenStack and Cisco UCS Director to orchestrate the network. You can also set up event-based applications that make network changes via an API call when an event meets defined criteria.
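As a rough illustration of managing ACI through the API instead of the GUI, the sketch below builds (but does not send) two HTTP requests: a login and a tenant creation. The `aaaLogin` endpoint, the `fvTenant` class, and the `APIC-cookie` session cookie follow the APIC REST API as I understand it. The hostname, credentials, token, and tenant name are made-up placeholders, so check the request shapes against the APIC REST API documentation for your release before relying on them.

```python
import json
import urllib.request

APIC_HOST = "apic.example.com"  # placeholder hostname, not a real controller


def build_login_request(username: str, password: str) -> urllib.request.Request:
    """Build the POST that asks the APIC for a session token."""
    body = {"aaaUser": {"attributes": {"name": username, "pwd": password}}}
    return urllib.request.Request(
        url=f"https://{APIC_HOST}/api/aaaLogin.json",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def build_tenant_request(tenant_name: str, token: str) -> urllib.request.Request:
    """Build the POST that creates a tenant under the policy universe (uni)."""
    body = {"fvTenant": {"attributes": {"name": tenant_name}}}
    return urllib.request.Request(
        url=f"https://{APIC_HOST}/api/mo/uni.json",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Cookie": f"APIC-cookie={token}",  # token would come from the login response
        },
        method="POST",
    )


# Nothing is sent over the network here; we only inspect the prepared requests.
login = build_login_request("admin", "example-password")
tenant = build_tenant_request("Tenant-A", token="token-from-login-response")
print(login.get_method(), login.full_url)
print(tenant.get_method(), tenant.full_url, tenant.data.decode("utf-8"))
```

An orchestration tool or an event-driven script would send requests like these with `urllib.request.urlopen` or a similar HTTP client, which is the same path the GUI takes under the hood.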

APIC Clustering

You need at least three APIC servers to form a cluster, which provides redundancy. All APICs in the cluster are active, and data is replicated and synchronized across the peers. If one of the APICs fails, the remaining APICs take over.

Advantages of Spine and Leaf Architecture

The ACI fabric “Spine and Leaf” architecture scales linearly in both performance and cost. When you need more server or device connectivity, you simply add a Leaf. You can add Leaves up to the capacity of your Spines. When you need more redundancy or more paths for bandwidth within the fabric, you simply add more Spines.

Basic ACI Fabric Wiring Layout

We typically connect every Leaf to every Spine. A Leaf never connects to another Leaf, and a Spine never connects to another Spine. Everything else in your network connects to one or several Leaves for redundancy and high availability (HA).
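The wiring rule is easy to express in a few lines. The sketch below is only an illustration: the switch names and counts are made up, and it simply enumerates the Leaf-to-Spine links of a full mesh to show how the link and path counts grow.

```python
from itertools import product


def build_fabric_links(num_spines: int, num_leaves: int) -> list[tuple[str, str]]:
    """Return every Leaf-to-Spine link in a full-mesh spine-leaf fabric."""
    spines = [f"spine{i}" for i in range(1, num_spines + 1)]
    leaves = [f"leaf{i}" for i in range(1, num_leaves + 1)]
    # Each Leaf connects to every Spine; there are no Leaf-Leaf or Spine-Spine links.
    return [(leaf, spine) for leaf, spine in product(leaves, spines)]


links = build_fabric_links(num_spines=2, num_leaves=4)
print(len(links))  # 8 links: 4 leaves x 2 spines

# Any two Leaves can reach each other through each Spine, so the number of
# equal-cost Leaf-to-Leaf paths equals the number of Spines.
paths_between_two_leaves = len({spine for _, spine in links})
print(paths_between_two_leaves)  # 2
```

Adding a Leaf adds one link per Spine, and adding a Spine adds one link per Leaf plus one more path between every pair of Leaves.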

Within the ACI architecture, traffic lives in one of two spaces: the infrastructure space and the user space. The user space can consist of a single organization, or it can scale up to 64,000 tenants or customers from a service provider’s perspective.

Virtual and physical devices such as VM hosts, firewalls, and IPS/IDS appliances all connect to the Leaves. External networks connect there as well, so ACI can work with your existing infrastructure: whatever networks you already have can connect to the ACI fabric. For example, we connect the Internet and Intranet CE routers to the Leaf layer of the ACI fabric.

ACI Terminologies

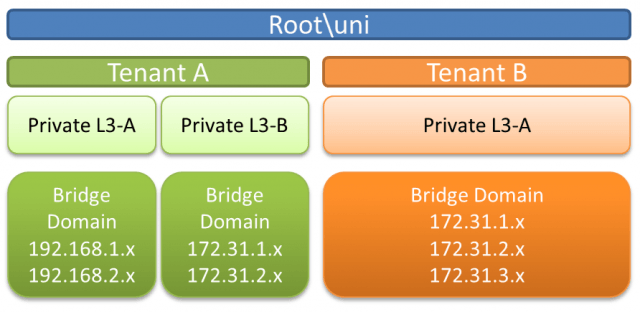

Tenant: A logical separator for a customer or a business unit. From a service provider’s perspective, tenants can be different customers.

Private Network (Layer 3): Equivalent to a VRF. It separates routing instances and can be used as a separate administrative domain.

Bridge Domain (BD): A container of subnets that can be used to define a Layer 2 boundary. It is not a VLAN, however.

End Point Group (EPG): Container for objects requiring the same policy treatment. For example, all users on wireless can be in an EPG. A stack of web servers can be in an EPG, requiring the same L2-L7 traffic filtering and load balancing.

This is the logical view of how these components work together.

As shown in the figure above, the ACI fabric is managing two Tenants, A and B. These two Tenants are different customers. They are completely separated, and one never sees the other’s traffic. Under each Tenant, we can have multiple Private Networks. Each network is its own routing domain, so they can have overlapping IP spaces (think of VRFs). Within each Private L3 network, you can have multiple subnets. As you can see, the ACI logical model is not much different from traditional Layer 2 and Layer 3 networks with VRFs. The main differentiator is that ACI uses the concept of a “container” where all the policies are stored. When a switch port is put in a container, its L2-L7 network characteristics are all set. This gives us great flexibility and scalability.
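To make the containment hierarchy concrete, here is a small sketch of the logical model as plain data classes. The class names mirror the terms above, but the tenant names, subnets, and the `has_overlap` helper are invented for illustration and are not APIC object names.

```python
from dataclasses import dataclass, field
from ipaddress import ip_network


@dataclass
class BridgeDomain:
    name: str
    subnets: list = field(default_factory=list)  # list of ip_network objects


@dataclass
class PrivateNetwork:  # equivalent to a VRF: its own routing domain
    name: str
    bridge_domains: list = field(default_factory=list)

    def subnets(self) -> list:
        return [s for bd in self.bridge_domains for s in bd.subnets]


@dataclass
class Tenant:
    name: str
    private_networks: list = field(default_factory=list)


def has_overlap(private_network: PrivateNetwork) -> bool:
    """True if any two subnets inside the same routing domain overlap."""
    nets = private_network.subnets()
    return any(a.overlaps(b) for i, a in enumerate(nets) for b in nets[i + 1:])


tenant_a = Tenant("A", [PrivateNetwork("A-prod", [
    BridgeDomain("bd-web", [ip_network("10.0.0.0/24")]),
    BridgeDomain("bd-db", [ip_network("10.0.1.0/24")]),
])])
tenant_b = Tenant("B", [PrivateNetwork("B-prod", [
    BridgeDomain("bd-app", [ip_network("10.0.0.0/24")]),  # same prefix as Tenant A
])])

# Overlap only matters inside one routing domain, so both tenants are valid.
print(has_overlap(tenant_a.private_networks[0]))  # False
print(has_overlap(tenant_b.private_networks[0]))  # False
```

Both tenants use 10.0.0.0/24, which is fine because each Private Network is checked on its own, just as each VRF keeps its own routing table.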

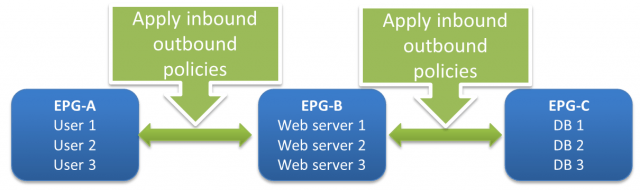

End Point Group (EPG)

An EPG separates the addressing of an application from its mapping and policy enforcement on the fabric. Network security policies, QoS, and load balancing are applied at the EPG level. Think of EPG-A as a container for all your web servers, EPG-B as a container for all the users who need to access the web service, and EPG-C as a container for all the backend databases. Network service policies are applied among EPG-A, B, and C. The relationship between two EPGs is called a “Contract”.

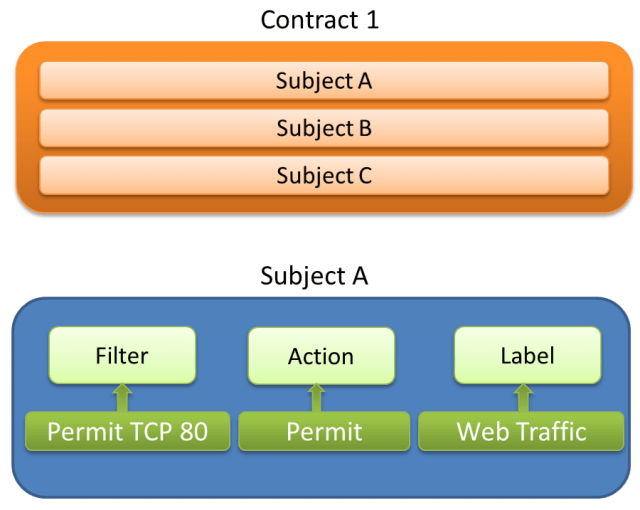

Contract: Defines how an EPG communicates with other EPGs. A Contract is the definition of policies.

Subject: It is used to build definitions of communications between EPGs.

Filter: Identifies the traffic a subject applies to, that is, the traffic you want to take action on. It is required within a subject.

Action: Actions to be taken on the filtered traffic within a subject. It is required in a subject. The actions can be Permit, Deny, Log, Redirect, Copy and Mark DSCP/CoS.

Contracts are groups of subjects that define communications between source and destination EPGs. Subjects are a combination of Filter, Action, and Label.

The communication a subject defines can be one-way or bidirectional. It can be as granular as defining which application can talk to whom (IP) in what language (port). Here is what an EPG-to-EPG policy looks like:

Internet EPG > Web Farm EPG

- Allow TCP 80 and 443

- Enforce IPS/IDS

- Enforce NAT

- Enforce SSL offload

- Enforce Load balancing

Web Farm EPG > Application EPG

- Allow TCP 5550

- Copy IPS/IDS

- No NAT

- Enforce Load balancing

Application EPG > Database EPG

- Allow TCP 1433

- Copy IPS/IDS

- No NAT

If you need to add more web servers, simply add them to the Web Farm EPG. If you need to add more resources to the DB farm, simply add them to the Database EPG. No network change is needed except for assigning the switch port to the right VLAN.
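The EPG-to-EPG policy above can be read as data plus a lookup. The sketch below is a simplified model, not how the APIC stores contracts: the `Contract` class and `is_permitted` function are hypothetical, and it assumes that traffic without a matching contract is not allowed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Contract:
    source_epg: str
    dest_epg: str
    allowed_tcp_ports: frozenset
    services: tuple = ()  # L4-L7 services applied to the permitted traffic


CONTRACTS = [
    Contract("Internet", "Web Farm", frozenset({80, 443}),
             ("IPS/IDS", "NAT", "SSL offload", "Load balancing")),
    Contract("Web Farm", "Application", frozenset({5550}),
             ("Copy to IPS/IDS", "Load balancing")),
    Contract("Application", "Database", frozenset({1433}),
             ("Copy to IPS/IDS",)),
]


def is_permitted(source_epg: str, dest_epg: str, tcp_port: int) -> bool:
    """Allow traffic only if a contract between the two EPGs lists the port."""
    return any(
        c.source_epg == source_epg
        and c.dest_epg == dest_epg
        and tcp_port in c.allowed_tcp_ports
        for c in CONTRACTS
    )


print(is_permitted("Internet", "Web Farm", 443))       # True
print(is_permitted("Internet", "Database", 1433))      # False: no contract
print(is_permitted("Web Farm", "Application", 80))     # False: port not listed
```

Notice that nothing in `CONTRACTS` names an individual server. Adding a web server only changes EPG membership, which is why the policy itself stays untouched.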

What is VxLAN

Inside the ACI infrastructure, we utilize VxLAN, or Virtual Extensible LAN.

In a traditional network, VLANs provide logical segmentation of Layer 2 boundaries or broadcast domains. However, due to the inefficient use of available network links with VLANs, rigid requirements on device placement in the data center network, and the limited scalability of a maximum of 4094 VLANs, VLANs have become a limiting factor for large enterprise networks and cloud service providers as they build large multitenant data centers.

The Virtual Extensible LAN (VxLAN) is a solution to the data center network challenges posed by traditional VLAN technology. The VxLAN standard provides the elastic workload placement and higher scalability of Layer 2 segmentation that today’s applications demand. Compared to traditional VLANs, VxLAN offers the following benefits:

- Flexible placement of network segments throughout the data center. It provides a solution to extend Layer 2 segments over the underlying shared network infrastructure so that physical location of a network segment becomes irrelevant.

- Higher scalability to address more Layer 2 segments. VLANs use a 12-bit VLAN ID to address Layer 2 segments, which limits scalability to 4094 VLANs. VxLAN uses a 24-bit segment ID known as the VxLAN Network Identifier (VNID), which enables up to 16 million VxLAN segments to coexist in the same administrative domain.

- Layer 3 overlay topology, with better utilization of available network paths in the underlying infrastructure. VLANs rely on the Spanning Tree Protocol for loop prevention, which can leave up to half of the network links unused by blocking redundant paths. In contrast, VxLAN packets are transferred through the underlying network based on their Layer 3 header and can take complete advantage of Layer 3 routing, equal-cost multipath (ECMP) routing, and link aggregation protocols to use all available paths.
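The scalability numbers follow directly from the ID widths. The sketch below works out the arithmetic and packs a VNID into the standard 8-byte VXLAN header described in RFC 7348. Note that this is the plain VXLAN header, not the extended header ACI uses inside the fabric, and the function names are my own.

```python
import struct

VLAN_ID_BITS = 12
VNID_BITS = 24

# IDs 0 and 4095 are reserved in 802.1Q, which leaves 4094 usable VLANs.
usable_vlans = 2 ** VLAN_ID_BITS - 2
vxlan_segments = 2 ** VNID_BITS
print(usable_vlans, vxlan_segments)  # 4094 16777216


def pack_vxlan_header(vnid: int) -> bytes:
    """Pack the 8-byte VXLAN header from RFC 7348 (not ACI's extended header)."""
    if not 0 <= vnid < 2 ** VNID_BITS:
        raise ValueError("VNID must fit in 24 bits")
    flags = 0x08  # the I flag: a valid VNID is present
    # Word 1: flags (8 bits) + 24 reserved bits.
    # Word 2: VNID (24 bits) + 8 reserved bits.
    return struct.pack("!II", flags << 24, vnid << 8)


def unpack_vnid(header: bytes) -> int:
    """Read the 24-bit VNID back out of an 8-byte VXLAN header."""
    _, second_word = struct.unpack("!II", header)
    return second_word >> 8


header = pack_vxlan_header(5000)
print(header.hex())         # 0800000000138800
print(unpack_vnid(header))  # 5000
```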

You can read more about VxLAN technology here: VXLAN Overview: Cisco Nexus 9000 Series Switches

Traffic Flow in ACI Fabric

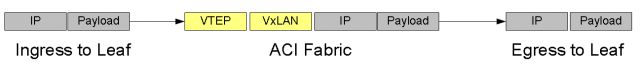

User traffic is encapsulated from the user space into VxLAN, and the VxLAN overlay provides Layer 2 adjacency when needed. This lets us emulate Layer 2 connectivity while gaining the scalability and flexibility of VxLAN.

When traffic comes into the infrastructure from the user space, it can arrive as untagged frames, an 802.1Q trunk, VxLAN, or NVGRE. We want to take any of this traffic and normalize it as it enters the ACI fabric. When the Leaf receives traffic from a host, it translates the frames to VxLAN and transports them to the destination on the fabric. For instance, we can transport traffic from Hyper-V servers that use Microsoft NVGRE: we take the NVGRE frames, encapsulate them in VxLAN, and send them to their destination Leaf. We can do this between any VM hypervisor workload and physical devices, whether they are bare metal servers or physical appliances providing Layer 3 to 7 services.

So the ACI fabric gives us the ability to completely normalize traffic coming from one Leaf and send it to another (or to the same Leaf). When the frames exit the destination Leaf, they are re-encapsulated into whatever format the destination network requires: untagged frames, an 802.1Q trunk, VxLAN, or NVGRE. The ACI fabric performs the encapsulation, de-capsulation, and re-encapsulation at line rate. The fabric not only provides Layer 3 routing for packets moving within the fabric, it also provides external routing to reach the Internet and Intranet routers.

- All traffic within the ACI Fabric is encapsulated with an extended VxLAN header and carried between VxLAN Tunnel End Points (VTEPs).

- User space VLAN, VxLAN, and NVGRE tags are mapped at the Leaf ingress point to a Fabric-internal VxLAN. Note that the Fabric-internal VxLAN acts like a wrapper around whatever frame format comes in.

- Routing and forwarding are done at the Spine level, often using MP-BGP.

- User space identities are localized to the Leaf or Leaf Port, allowing reuse and/or translation if required.
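The normalization described above boils down to two table lookups: one at ingress and one at egress. The following sketch is a toy model under stated assumptions: the port names, VNID values, and the `INGRESS_MAP` and `EGRESS_MAP` tables are all invented, and real Leaves do this in hardware.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Frame:
    encap: str                # "untagged", "vlan", "vxlan", or "nvgre"
    encap_id: Optional[int]   # VLAN ID, VNID, or NVGRE ID; None when untagged
    payload: bytes


# Hypothetical ingress table: (Leaf port, encap, ID) -> Fabric-internal VNID.
# VLAN 10 appears twice because user-space IDs are only local to a Leaf port.
INGRESS_MAP = {
    ("leaf1/eth1", "vlan", 10): 15001,
    ("leaf1/eth2", "nvgre", 7000): 15001,
    ("leaf3/eth1", "vlan", 10): 15002,
}

# Hypothetical egress table: (Leaf port, Fabric VNID) -> format the device expects.
EGRESS_MAP = {
    ("leaf2/eth5", 15001): ("untagged", None),
    ("leaf1/eth1", 15001): ("vlan", 10),
}


def normalize(port: str, frame: Frame) -> tuple:
    """Strip the user-space tag and return (fabric VNID, original payload)."""
    vnid = INGRESS_MAP[(port, frame.encap, frame.encap_id)]
    return vnid, frame.payload


def re_encapsulate(port: str, vnid: int, payload: bytes) -> Frame:
    """Rebuild the frame in whatever format the egress port expects."""
    encap, encap_id = EGRESS_MAP[(port, vnid)]
    return Frame(encap, encap_id, payload)


nvgre_in = Frame("nvgre", 7000, b"hello")
vnid, payload = normalize("leaf1/eth2", nvgre_in)
out = re_encapsulate("leaf2/eth5", vnid, payload)
print(vnid, out.encap, out.payload)  # 15001 untagged b'hello'
```

The payload never changes. Only the wrapper does, which is what lets an NVGRE workload talk to an untagged bare metal server.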

When we connect existing data center networks to ACI, we accept either ordinary subnets on any given VRF or a VLAN for any given external device. We then bring them into the ACI fabric as external entities or groups that can take part in the logical model we build in the Application Centric Infrastructure.

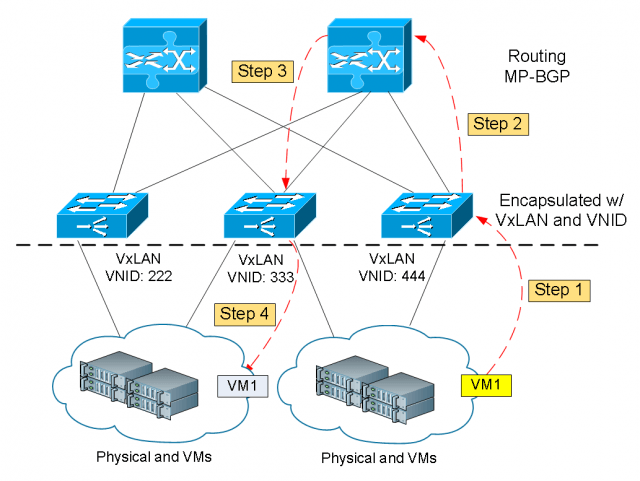

Location Independent

VxLAN not only eliminates the Spanning Tree Protocol, it also gives us location independence within the fabric. The IP address itself is intended to identify a device for forwarding purposes. Within the ACI fabric, we take a device IP and map it to a VxLAN ID, or VNID, which helps us identify where the device is located at any given time. In other words, any virtual machine host is identified by its IP address within the server and by the VNID at the ACI Leaf. If this host migrates to a VM hypervisor at a different location within the ACI fabric, its VNID is replaced by the destination Leaf’s VNID and traffic is forwarded there. The ACI fabric knows that the VM with the same IP is the same host, simply relocated. This allows very robust forwarding to a device while maintaining the flexibility of workload mobility. The result is a robust, extremely scalable fabric that provides mobility within the user space across the infrastructure space for any end point.

In the diagram above, let’s say we are migrating VM1 from the server farm on the right to the left. These steps are followed:

- Step 1: VM1’s traffic is sent to the Leaf where it is directly connected. The frames are normalized and encapsulated into VxLAN format.

- Step 2: VM1 is identified by its IP address within the server and the VxLAN Network Identifier (VNID) of the Leaf it currently sits on. The Leaf then forwards the packet to the Spine for a forwarding decision.

- Step 3: The Spine replaces the VNID with the destination Leaf’s VNID and sends the packet over.

- Step 4: The destination Leaf receives the packet, strips off the VxLAN wrapper, and forwards it to the new server farm. From the network’s perspective, VM1 is still the same host with the same IP address.
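The key idea in these steps is that identity (the IP address) is kept separate from location (the Leaf). The sketch below reduces that to a dictionary. It is a deliberate simplification: `EndpointDirectory`, the Leaf names, and the IP address are hypothetical, and the real fabric tracks location with VNIDs and VTEPs rather than a Python dict.

```python
from typing import Optional


class EndpointDirectory:
    """Hypothetical stand-in for the fabric's endpoint-to-location mapping."""

    def __init__(self) -> None:
        self._location = {}  # endpoint IP -> Leaf it currently sits behind

    def learn(self, ip: str, leaf: str) -> None:
        """Record (or update) the Leaf where an endpoint was last seen."""
        self._location[ip] = leaf

    def lookup(self, ip: str) -> Optional[str]:
        """Return the current Leaf for an endpoint, or None if unknown."""
        return self._location.get(ip)


directory = EndpointDirectory()
VM1_IP = "10.0.0.11"

directory.learn(VM1_IP, "leaf-right")   # VM1 starts in the right-hand server farm
before = directory.lookup(VM1_IP)

directory.learn(VM1_IP, "leaf-left")    # VM1 migrates; only its location changes
after = directory.lookup(VM1_IP)

print(before, after)  # leaf-right leaf-left
```

Senders keep using the same IP address throughout. Only the lookup result changes, so the move is invisible to them.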

ACI Fabric Policy Management and Enforcement

Applying Policies to End Point

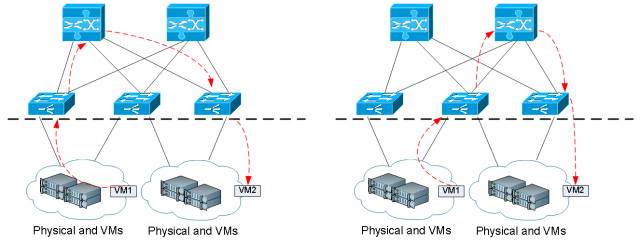

When an End Point attaches to the fabric on a Leaf, the APIC detects the End Point and assigns it to its designated EPG. The APIC then pushes the required source and destination policies to the Leaf switch where the new End Point is attached. In other words, the APIC manages pushing and enforcing the policy when an End Point connects.

Traffic Flow Between EPGs

When a new End Point is attached to the ACI Fabric, its traffic follows these steps to reach its destination.

- The source EPG policy is applied to the End Point as it connects to the Leaf.

- If the destination EPG is known, the policy is enforced. If the destination EPG is unknown, the packet is forwarded to the proxy in the Spine.

- The Spine then looks up its routing table and forwards the packet to its destination Leaf. If a route is not found, the packet is dropped.

- The destination Leaf enforces the policy before the packet exits from the fabric.

As you can see, policy is enforced opportunistically at the ingress Leaf when the destination EPG is known; otherwise, enforcement is done at the egress Leaf.
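The decision described in these steps can be sketched as a small function. This is an illustrative model only: `enforcement_point`, its arguments, and the sample addresses are hypothetical, and the two lookups stand in for the Leaf’s local knowledge and the Spine’s table.

```python
def enforcement_point(dest_ip: str, ingress_known_epgs: dict, spine_routes: dict) -> str:
    """Return where policy is enforced for a packet, or 'dropped'.

    ingress_known_epgs: destination IP -> EPG, as already known on the ingress Leaf
    spine_routes:       destination IP -> destination Leaf, as known by the Spine
    """
    if dest_ip in ingress_known_epgs:
        # Destination EPG is known locally: enforce right away at ingress.
        return "ingress-leaf"
    if dest_ip not in spine_routes:
        # Sent to the Spine proxy, but the Spine has no route either.
        return "dropped"
    # The Spine forwards to the destination Leaf, which enforces before exit.
    return "egress-leaf"


known_on_ingress = {"10.0.1.20": "Database"}
spine_table = {"10.0.1.20": "leaf4", "10.0.2.30": "leaf7"}

print(enforcement_point("10.0.1.20", known_on_ingress, spine_table))  # ingress-leaf
print(enforcement_point("10.0.2.30", known_on_ingress, spine_table))  # egress-leaf
print(enforcement_point("10.9.9.9", known_on_ingress, spine_table))   # dropped
```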

ACI Fabric Load Balancing and Failover

Most traditional port-channel and vPC based load balancing is done on a per-flow basis. It was not possible to rebalance a flow once it was established. In the ACI world, the fabric uses Flowlet Switching, which can send the bursts of packets within a flow (flowlets) over different paths independently.

Each Leaf maintains a time stamp for the Flowlet. When packets arrive over multiple paths, they come together in the correct sequence, so no packet reordering is needed. In the same fashion, link failover and traffic reroute happen in microseconds.
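A minimal sketch of the time stamp idea follows, assuming a flowlet ends when a flow has been idle longer than some gap. The gap value, the class names, and the round-robin path choice are my own placeholders, not documented ACI behavior; a real fabric would pick paths based on its own load information.

```python
from dataclasses import dataclass

FLOWLET_GAP_SECONDS = 0.0005  # hypothetical idle gap; not a documented ACI value


@dataclass
class FlowState:
    last_seen: float  # time stamp of the flow's most recent packet
    path: int         # path used by the current flowlet


class FlowletBalancer:
    def __init__(self, num_paths: int, gap: float = FLOWLET_GAP_SECONDS) -> None:
        self.num_paths = num_paths
        self.gap = gap
        self._flows = {}      # flow ID -> FlowState
        self._next_path = 0   # simple round-robin stand-in for path selection

    def pick_path(self, flow_id: str, now: float) -> int:
        """Return the path for a packet arriving at time `now`."""
        state = self._flows.get(flow_id)
        if state is None or now - state.last_seen > self.gap:
            # New flow, or idle long enough: start a new flowlet on the next path.
            path = self._next_path
            self._next_path = (self._next_path + 1) % self.num_paths
            self._flows[flow_id] = FlowState(last_seen=now, path=path)
            return path
        # Still inside the current flowlet: stay on the same path.
        state.last_seen = now
        return state.path


balancer = FlowletBalancer(num_paths=2)
arrival_times = [0.0, 0.0001, 0.0002, 0.002]  # the last packet follows an idle gap
paths = [balancer.pick_path("flow-1", t) for t in arrival_times]
print(paths)  # [0, 0, 0, 1]
```

The first three packets are close together and stay on one path. The fourth arrives after a pause, so it can safely start on a different path: if the gap is longer than the delay difference between paths, the earlier burst has already been delivered and ordering is preserved.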

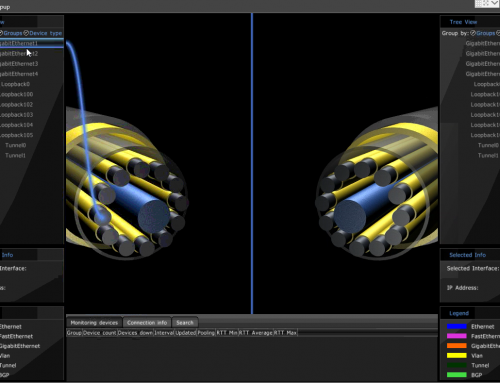

APIC Layer 2 – 7 Visibility

The ACI fabric provides analytic visibility at the per-application, per-host, and per-tenant level. Within the APIC management console, you can run ping and traceroute, and check network latency, CPU and RAM utilization on each device, bandwidth per port, packet loss, jitter, and network health scores.

ACI Fabric Scalability

The scalability of the fabric is based on the Spine and Leaf design. With this design, we get linear scale from both a performance and a cost perspective. It is a cost-effective approach as we grow the network. A network can be as small as fewer than a hundred ports or as large as a hundred thousand 10G ports and a million end points.
